- MNE-Python version: 0.24.0

- operating system: Linux

Hi guys,

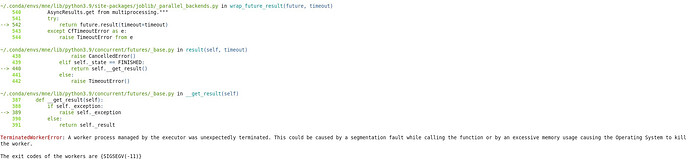

I've recently been doing decoding with `cross_val_multiscore()`. Whenever I set `n_jobs` > 1 (e.g. `n_jobs = 2` or any other value), the same error is always raised.

Here is my code:

    labels_fea_col = epoch.metadata['feature_color']
    labels_fea_ani = epoch.metadata['feature_animacy']
    labels_fea_siz = epoch.metadata['feature_size']

    n_cvsplits = 10
    cv = StratifiedKFold(n_splits=n_cvsplits)
    n_jobs = 8

    clf = make_pipeline(
        StandardScaler(),  # Z-score data, because gradiometers and magnetometers have different scales
        LogisticRegression(random_state=0, n_jobs=n_jobs, max_iter=500)
    )

    # pick channels
    # channel names ending with 1: mag; ending with 2 or 3: grad.
    # ch_names = epoch.info['ch_names']
    info = epoch.info
    hemi = ['Left', 'Right']
    rois = ['frontal', 'parietal', 'temporal', 'occipital']
    fea_types = ['col', 'ani', 'siz']

    for h in hemi:
        for r in rois:
            # acquire epochs of each roi
            globals()[f'picks_{h}_{r}'] = read_vectorview_selection('{}-{}'.format(h,r), info=info)
            globals()[f'epochs_{h}_{r}'] = epoch.copy().pick_channels(globals()[f'picks_{h}_{r}'])

            # get data from epochs
            globals()[f'epo_data_{h}_{r}'] = globals()[f'epochs_{h}_{r}'].get_data()
            np.save(result_path + 'epo_data/sub-{}_epodata_2.5s_per_task_{}_{}_{}.npy'.format(f'{subj_id:03}', task_type, h, r), globals()[f'epo_data_{h}_{r}'])
            # shape: (n_trials, n_channels, n_times), e.g., (102, 39, 500) for subj14.

            for f in fea_types:
                globals()[f'slid_{h}_{r}_fea_{f}'] = SlidingEstimator(clf, n_jobs=n_jobs, scoring='roc_auc', verbose=True)

                # =============== get auc ===============
                globals()[f'auc_{h}_{r}_fea_{f}'] = cross_val_multiscore(globals()[f'slid_{h}_{r}_fea_{f}'], globals()[f'epo_data_{h}_{r}'], globals()[f'labels_fea_{f}'], cv=cv, n_jobs=n_jobs)

I checked the memory usage while the code was running and it didn't use much memory, so this doesn't seem to be the excessive memory usage problem that the error message implies. Previously, setting `n_jobs` > 1 (e.g. `n_jobs = 20`) worked fine, so I'm very confused about why it no longer works.
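To make the memory check reproducible, here is a minimal sketch of one way to read peak memory from inside the script itself. It is not the tool used for the check described above. It assumes a Unix system (the standard-library `resource` module is not available on Windows), and the helper name `peak_rss_mb` is hypothetical.

```python
import resource
import sys


def peak_rss_mb(who=resource.RUSAGE_SELF):
    """Return the peak resident set size in MiB (hypothetical helper)."""
    peak = resource.getrusage(who).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux and in bytes on macOS.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


# Peak memory of the main Python process so far.
print(f"parent peak RSS:   {peak_rss_mb():.1f} MiB")

# Largest peak among child processes that have already terminated and been
# waited for. Worker processes that are still alive are not included here.
print(f"children peak RSS: {peak_rss_mb(resource.RUSAGE_CHILDREN):.1f} MiB")
```

Calling this right after the `cross_val_multiscore()` line would show the parent's peak. Because parallel workers may still be alive at that point, the children figure can understate their usage, so a system monitor is still worth watching alongside it.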

Any help would be appreciated!